The Prompt That Makes My Local Coding Agent Possible

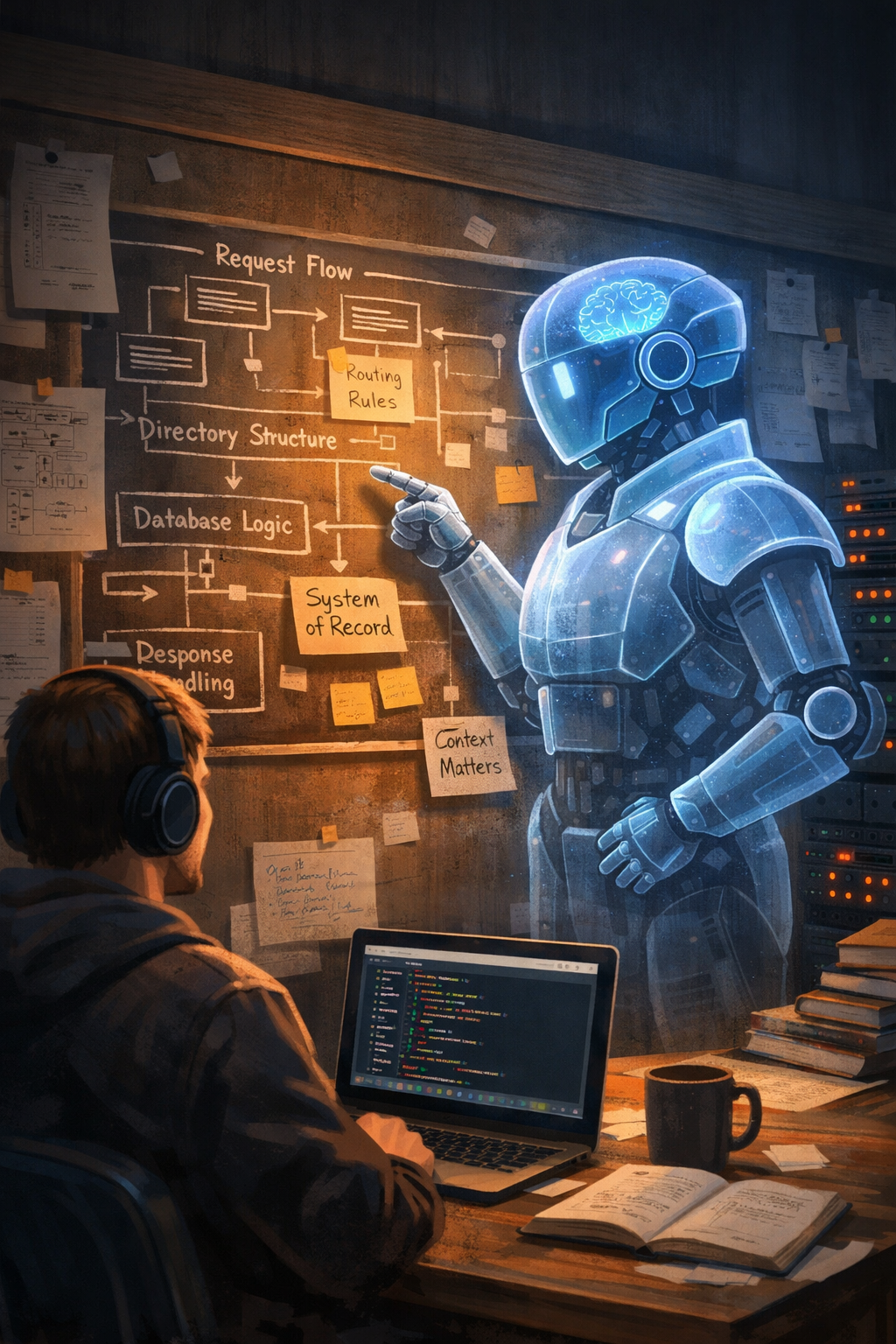

This isn’t about prompt engineering or better answers. It’s about orientation. I describe the context prompt I use with my local coding agent and explain why telling an AI where it is matters more than telling it what to do.

I don’t start a coding session with my local AI agent by asking it to help me write some code. I start by telling it where it is. Not metaphorically. Literally. What kind of system it’s inside, what assumptions do and do not hold, and what kinds of mistakes are expensive here.

That distinction turns out to matter more than any clever instruction that follows.

This piece is about the context prompt I use to initialize a local agent while coding through the OpenAI harness in VS Code on Ubuntu. It isn’t a trick, and it isn’t about squeezing better answers out of a model. It’s about making collaboration possible at all in a system that has memory, consequence, and continuity.

AdTap, and its primary deployment at The Mountain Eagle, is not a greenfield app. It’s not a playground. It’s a system of record. It carries money, identity, workflows, audit trails, and the accumulated history of decisions that other people depend on. Systems like that have a shape, whether you name it or not.

Before AI, that shape was expensive to honor. Not impossible, but brittle. Too much glue logic. Too many edge cases that only existed in people’s heads. Too much operational load landing on whoever happened to remember how something worked last time. The system could exist, but only by leaning hard on humans.

AI didn’t change the nature of the system. It changed the cost of honoring it.

That only works, though, if the agent knows what kind of place it has been dropped into. Left uninitialized, a model does what it should do: it guesses. It reaches for familiar web patterns, popular frameworks, verbose abstractions, and defaults that are perfectly reasonable almost everywhere else. In this codebase, those guesses quietly create damage.

So the first thing I do is remove ambiguity.

The prompt I use isn’t a task list. It’s a boundary. It establishes, early and explicitly, that this is a system of record. If something isn’t represented inside the system, it does not exist. Side channels are not conveniences; they’re liabilities. Persistence, auditability, and predictable structure matter more than elegance or cleverness.

Once that premise is set, the rest of the architecture stops looking strange. Views are intentionally thin. Brokers carry the weight. Responses always have the same envelope. Variable names are compact on purpose. Exceptions are allowed to surface instead of being swallowed. These aren’t stylistic quirks. They’re load-bearing decisions made so the system can survive turnover, fatigue, and time.
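To make those decisions concrete, here is a minimal sketch of how they can fit together. It is hypothetical: AdTap’s actual code isn’t shown here, and the names `envelope`, `InvoiceBroker`, `create_invoice_view`, the `ok`/`data`/`err` fields, and the in-memory storage are all invented for illustration. The view only unpacks input and wraps output, the broker owns validation and persistence, every response uses one envelope, and a bad value raises instead of being quietly swallowed.

```python
from typing import Any, Dict, Optional


def envelope(ok: bool, data: Any = None, err: Optional[str] = None) -> dict:
    """Hypothetical uniform response shape: every view returns exactly these keys."""
    return {"ok": ok, "data": data, "err": err}


class InvoiceBroker:
    """Hypothetical broker: owns validation and persistence so views stay thin."""

    def __init__(self) -> None:
        # In-memory stand-in for real persistence (an assumption for this sketch).
        self._rows: Dict[int, dict] = {}

    def create(self, amt: int, acct: str) -> dict:
        # Fail loudly: an invalid amount raises rather than being coerced or ignored.
        if amt <= 0:
            raise ValueError("amt must be positive")
        inv_id = len(self._rows) + 1
        self._rows[inv_id] = {"id": inv_id, "amt": amt, "acct": acct}
        return self._rows[inv_id]


def create_invoice_view(broker: InvoiceBroker, payload: dict) -> dict:
    # Thin view: unpack the input, delegate to the broker, wrap the result.
    # No try/except here, so exceptions surface to whatever handles them upstream.
    row = broker.create(int(payload["amt"]), payload["acct"])
    return envelope(True, data=row)


if __name__ == "__main__":
    broker = InvoiceBroker()
    print(create_invoice_view(broker, {"amt": "1200", "acct": "A-17"}))
    # {'ok': True, 'data': {'id': 1, 'amt': 1200, 'acct': 'A-17'}, 'err': None}
```

The `err` field is left for an upstream handler (not shown) that would turn a surfaced exception into an error envelope after it has been recorded. The compact names (`amt`, `acct`) mirror the deliberate naming style described above.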

The interesting part is that the actual prompt I feed the agent isn’t a bespoke incantation at all. It’s the developer onboarding guide for the project. The same document a human engineer would read to understand how things are wired, what invariants exist, and where deviation is costly.

That guide doesn’t just explain how the system works. It explains why certain modern practices are intentionally avoided, why some constraints exist that look arbitrary from the outside, and why consistency beats local optimization here. It encodes the grain of the system.

Once the agent has that context, its behavior changes in a way that’s hard to unsee. It stops proposing architectures that don’t exist. It stops trying to improve things by importing patterns that don’t belong. It starts producing code that looks like it was written by someone who has already paid the cost of learning this place.

This is where a lot of the “AI replaces X” conversation goes off the rails. In this setup, AI isn’t making decisions. It isn’t exercising judgment. It’s absorbing variance. It’s taking on the parts of the work that would otherwise require constant vigilance from humans just to keep things aligned.

The system sits in an uncomfortable intersection of money, editorial judgment, messy human workflows, and local reality. Hard automation breaks there. Purely manual operation burns people out. The agent helps normalize input, extend existing patterns without drifting, and reduce the friction of doing the right thing.

That only works because the agent has been situated. Not made smarter. Oriented.

I don’t think of this as prompt engineering. That framing implies clever phrasing and hidden levers. This is closer to onboarding. It’s the same move you’d make with a senior engineer: here’s the map, here are the invariants, here’s where the cliffs are. Now work within that, and don’t fight it.

The quiet payoff is that the code the agent produces belongs. It respects the response shapes. It follows the file layout rules. It avoids premature abstraction. It doesn’t just function; it fits. And code that fits is the difference between a system that accumulates debt and one that can keep going.

AI didn’t kill this kind of system. It made it viable without grinding people down.

But only because the collaboration is structured, the context is explicit, and the agent is treated not as an oracle but as a participant who needs orientation.

The prompt isn’t there to make the model smarter. It’s there to make the work survivable.